When building experiences in virtual reality, we’re confronted with the challenge of mimicking how sounds reach us from all directions in the real world. One useful tool for this is the soundfield microphone. We tested one of these microphones to explore how audio contributes to building immersive experiences for virtual reality.

Recording ambisonics with a soundfield microphone has become popular in VR development, particularly for 360 video. Because the microphone records from one fixed point while capturing sound arriving from every direction, we can still place sounds accurately all around the listener. This also makes editing your video easier, since only one microphone needs to be removed from the visuals rather than several placed around the scene. Ambisonic audio is also useful as background ambience for VR experiences rendered in game engines like Unity. The technique has been with us since the 1970s, courtesy of Michael Gerzon and Peter Craven, but was largely a commercial failure. Its applicability to VR, however, has revived interest in the approach.

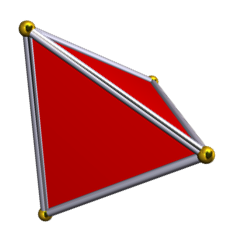

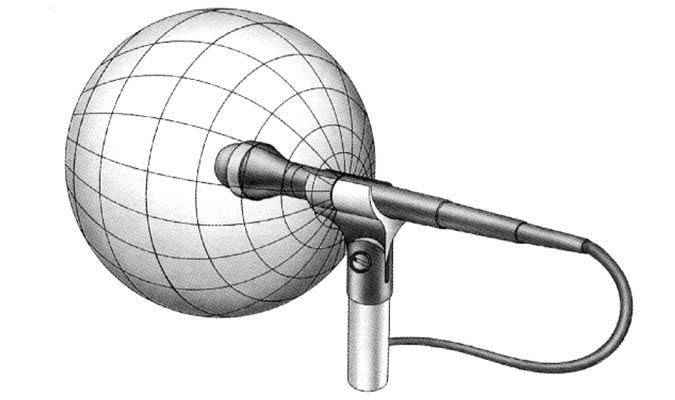

We tested Core Sound’s TetraMic and processed the audio from this soundfield microphone into an ambisonic format usable for virtual reality. It follows the conventional design: four cardioid microphone capsules arranged in a tetrahedron. You can picture each capsule picking up the sound directly in front of it in a kind of dome. When all four record simultaneously, we capture audio information from the entire space, with a moderate degree of overlap between the capsules. On its own, though, this just gives us four distinct audio signals, one from the area each capsule is facing. We need to combine this audio so that it can be relayed over the two channels of a pair of headphones and shift as we move our head around the scene. Converting it to an ambisonic format makes this possible.

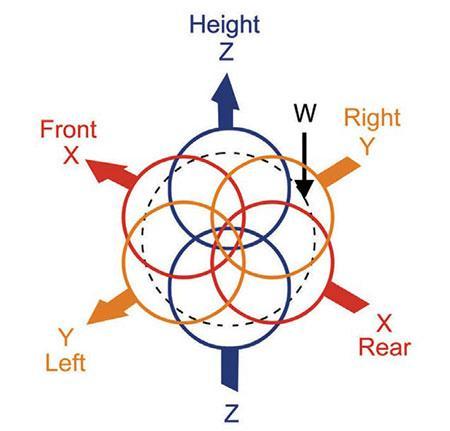

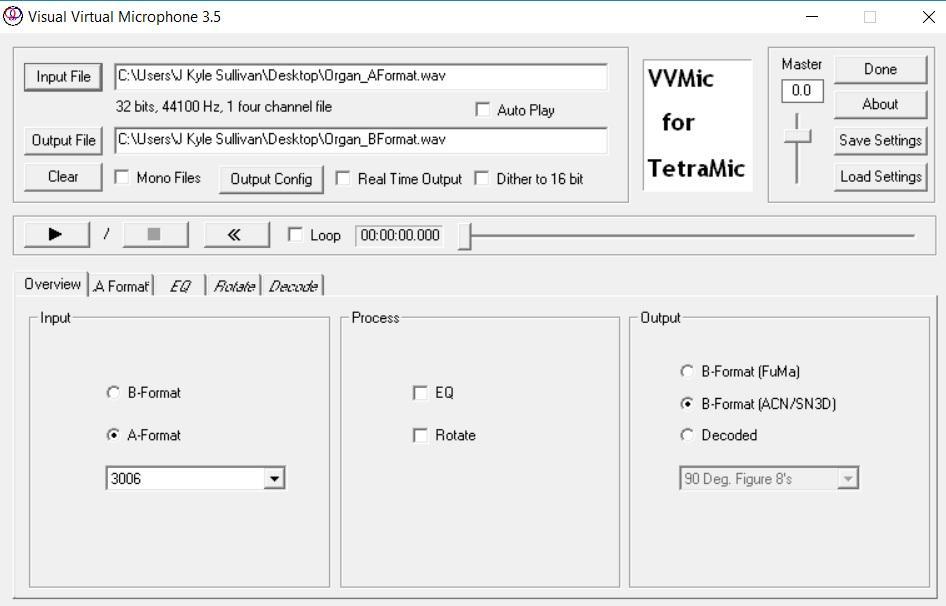

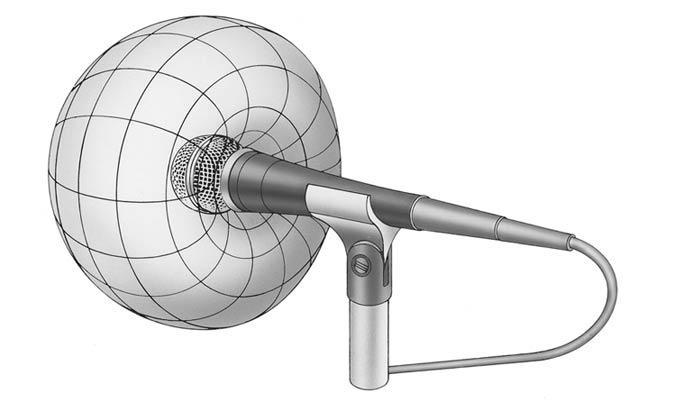

These four unprocessed signals are called the A-Format. They need to be converted, usually by software provided by the microphone’s manufacturer, into the Ambisonic B-Format standard. This processing transforms the four separate capsule signals into a three-dimensional soundfield, a kind of virtual sphere around the microphone, which gives us the flexibility to decode it into any polar pattern and to any number of audio signals we wish. The B-Format consists of four signals: W, X, Y and Z. The convention is derived from spherical harmonics, functions defined on the surface of a sphere. X, Y and Z are simply named after the axes of a right-handed three-dimensional coordinate system, so don’t read too much into the lettering.
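For a rough idea of what the manufacturer’s software does, here is a minimal Python sketch of the idealized A-to-B conversion for a tetrahedral array. It assumes the textbook capsule layout (front-left-up, front-right-down, back-left-down, back-right-up) and a hypothetical function name, `a_to_b`; it is not VVMic’s algorithm, which also applies per-capsule calibration and frequency correction. The overall gain of 0.5 is an arbitrary choice.

```python
from typing import List, Tuple

Signal = List[float]


def a_to_b(flu: Signal, frd: Signal, bld: Signal,
           bru: Signal) -> Tuple[Signal, Signal, Signal, Signal]:
    """Idealized first-order A-Format to B-Format conversion (sketch).

    Each argument holds one capsule's samples. Capsule names give the
    direction it points: front-left-up, front-right-down, back-left-down,
    back-right-up (an assumed layout; check your microphone's docs).
    Real converters also calibrate and filter each capsule; omitted here.
    """
    w, x, y, z = [], [], [], []
    for a, b, c, d in zip(flu, frd, bld, bru):
        w.append(0.5 * (a + b + c + d))  # pressure: all capsules summed
        x.append(0.5 * (a + b - c - d))  # front capsules minus back capsules
        y.append(0.5 * (a - b + c - d))  # left capsules minus right capsules
        z.append(0.5 * (a - b - c + d))  # up capsules minus down capsules
    return w, x, y, z
```

A sound that only the two front capsules hear equally ends up entirely in W and X, with Y and Z cancelling, which is what you would expect for a source straight ahead.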

- W: simulating the output of an omnidirectional microphone, or full sphere.

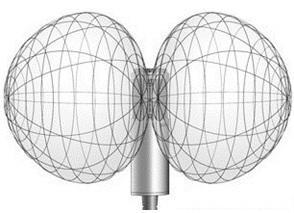

- X: front-to-back directionality, simulating a forward-facing "figure of eight" microphone.

- Y: left-to-right directionality, simulating a sideways-facing "figure of eight" microphone.

- Z: up-and-down directionality, simulating a vertical "figure of eight" microphone.
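To make the W, X, Y and Z descriptions concrete, the sketch below encodes a single mono sample arriving from a given direction into first-order B-Format. It assumes SN3D normalization (the ACN/SN3D convention most VR players expect) and azimuth measured counterclockwise from straight ahead; `encode_first_order` is a hypothetical helper, not part of any tool mentioned in this article.

```python
import math
from typing import Tuple


def encode_first_order(sample: float, azimuth_deg: float,
                       elevation_deg: float) -> Tuple[float, float, float, float]:
    """Encode one mono sample as first-order B-Format (W, X, Y, Z), SN3D gains.

    Azimuth is counterclockwise from the front (90 = left); elevation is
    measured up from the horizon (90 = straight up). Assumed conventions.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample                                # omni: same gain from every direction
    x = sample * math.cos(az) * math.cos(el)  # front/back figure of eight
    y = sample * math.sin(az) * math.cos(el)  # left/right figure of eight
    z = sample * math.sin(el)                 # up/down figure of eight
    return w, x, y, z
```

A source straight ahead lands entirely in W and X, while a source directly to the left lands in W and Y. Under the older FuMa normalization, W would instead be scaled by 1/√2.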

You can think of the overlapping signals in figure 1 as the totality of our soundfield in the B-Format, and the rotating polar patterns in figure 2 as the ways in which we can manipulate the directionality of audio contained in that soundfield by morphing the signals.

In principle, this format can be decoded into any pickup pattern, which is what makes ambisonics so flexible. Picture a standard directional microphone, capturing sound mainly in the direction you point it. With the information gathered from that mic, all we have is what’s in front of the capsule. With a soundfield microphone, because we’re now working with sound from all directions, we can use software to create virtual microphones. If I want to focus on what’s directly behind me, I can use the software to isolate that portion of the soundfield and render an audio file with that directionality. We never had a microphone pointing exclusively in that direction, but because I captured the entire soundfield, I can isolate that source by creating this "virtual microphone."
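At first order, a virtual microphone reduces to a weighted sum of the B-Format signals. In this minimal sketch (hypothetical function name, SN3D-normalized input assumed), X, Y and Z are projected onto the direction we want to listen in and blended with W; a `pattern` of 0.5 gives a cardioid. Aiming it at an azimuth of 180 degrees gives the "directly behind me" microphone described above.

```python
import math


def virtual_mic(w: float, x: float, y: float, z: float,
                azimuth_deg: float, elevation_deg: float,
                pattern: float = 0.5) -> float:
    """Steer a first-order virtual microphone through SN3D B-Format signals.

    pattern blends omni and figure of eight: 1.0 = omnidirectional,
    0.5 = cardioid, 0.0 = figure of eight. Azimuth is counterclockwise
    from the front, elevation upward from the horizon (assumed conventions).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    # Figure of eight aimed at (az, el): project X, Y, Z onto that direction.
    fig8 = (x * math.cos(az) * math.cos(el)
            + y * math.sin(az) * math.cos(el)
            + z * math.sin(el))
    return pattern * w + (1.0 - pattern) * fig8
```

A source straight ahead (W = 1, X = 1, Y = Z = 0) comes through at full level on a front-facing virtual cardioid and is cancelled completely by a rear-facing one.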

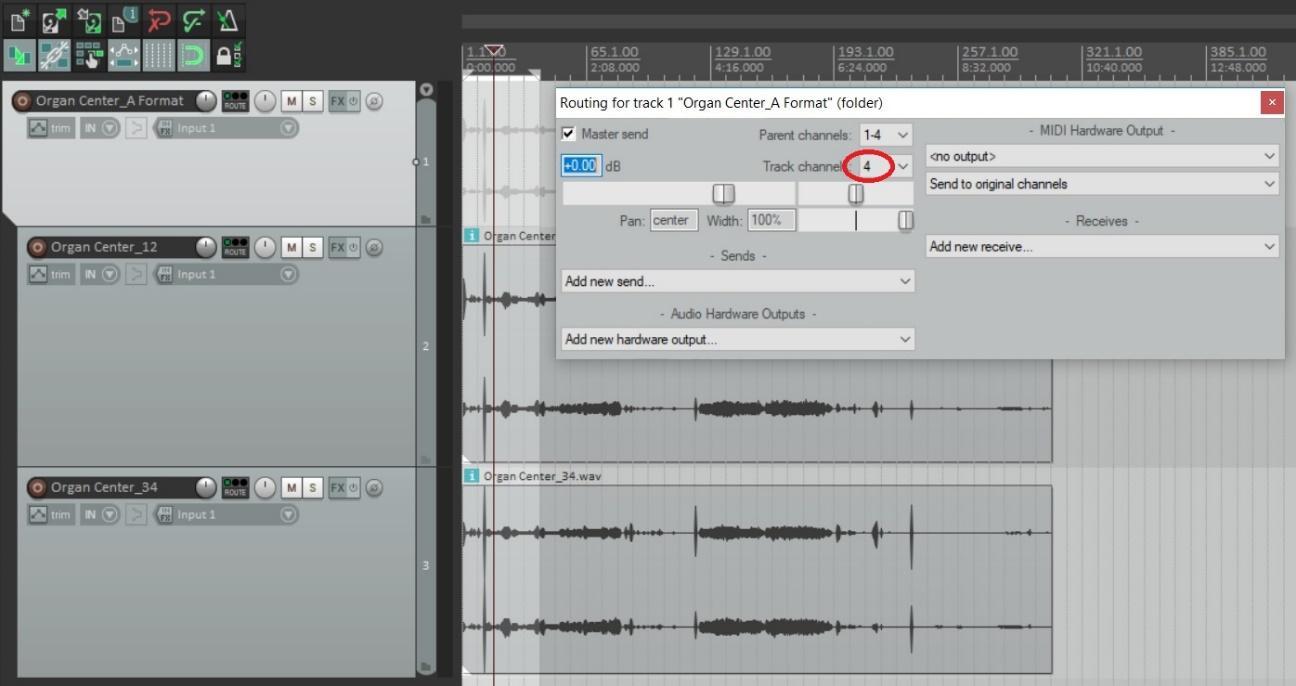

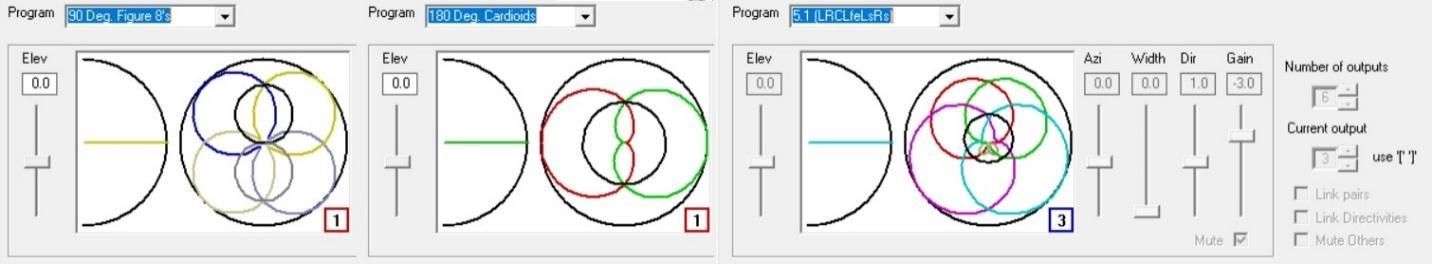

While a soundfield microphone gets the process started, it’s not as simple as hitting record and getting your virtual sphere. Many portable multichannel recorders save their inputs as stereo (two-channel) files. Using the Core Sound TetraMic with the TASCAM DR-701D, our four capsule signals were split across two stereo files: the first and second capsule signals went to one file, and the third and fourth to another. It’s important to log at the beginning of each recording which direction each capsule is facing, so that sounds are positioned accurately once you’re working with the B-Format file.
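Because VVMic takes a single four-channel file, the two stereo files need to be recombined first. The sketch below uses Python’s standard `wave` module and assumes 16-bit PCM input with matching sample rates; the function name `merge_stereo_pairs` and any file paths are hypothetical, and a recorder set to 24-bit would need a different sample format. Whatever tool you use, keep the channels in the order you logged for the capsules.

```python
import sys
import wave
from array import array


def merge_stereo_pairs(path_12: str, path_34: str, out_path: str) -> None:
    """Interleave two 16-bit stereo WAV files into one 4-channel WAV.

    path_12 holds capsules 1 and 2, path_34 holds capsules 3 and 4.
    Output channel order is capsule 1, 2, 3, 4.
    """
    with wave.open(path_12, "rb") as a, wave.open(path_34, "rb") as b:
        for f in (a, b):
            if f.getnchannels() != 2 or f.getsampwidth() != 2:
                raise ValueError("expected 16-bit stereo WAV input")
        if a.getframerate() != b.getframerate():
            raise ValueError("sample rates differ")
        rate = a.getframerate()
        n = min(a.getnframes(), b.getnframes())  # trim to the shorter take
        pair_12 = array("h", a.readframes(n))    # interleaved L/R samples
        pair_34 = array("h", b.readframes(n))

    if sys.byteorder == "big":  # WAV sample data is little-endian
        pair_12.byteswap()
        pair_34.byteswap()

    merged = array("h")
    for i in range(n):
        merged.extend((pair_12[2 * i], pair_12[2 * i + 1],
                       pair_34[2 * i], pair_34[2 * i + 1]))

    if sys.byteorder == "big":
        merged.byteswap()

    with wave.open(out_path, "wb") as out:
        out.setnchannels(4)
        out.setsampwidth(2)
        out.setframerate(rate)
        out.writeframes(merged.tobytes())
```

Trimming to the shorter file keeps the four channels sample-aligned; if the two files differ in length by more than a few samples, that is worth investigating before converting.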

The Core Sound TetraMic includes a Windows application calibrated specifically to the microphone, called VVMic. This software takes our four-channel file (A-Format) as input and renders an ambisonic file (B-Format) as output. The route from A to B may vary depending on the microphone model used for the recording.

There has been some variation in the conventions for calculating B-Format audio, but software developers have largely settled on ACN/SN3D. Simply put, this describes the audio’s channel ordering and normalization. Game engines like Unity and 360 video players on YouTube and Facebook accept this standard for VR playback. The player reads this format and decodes it in real time to the two channels of your headphones, based on where you’re looking.
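ACN/SN3D can be expressed in a few lines. The sketch below computes the ACN channel number n(n + 1) + m, converts one first-order frame from the older FuMa (Furse-Malham) convention, where W is stored at 1/√2, into ACN/SN3D order (W, Y, Z, X), and shows the kind of yaw rotation a player applies as your head turns. The function names are hypothetical, and real players also handle pitch, roll and the binaural decode, which are omitted here.

```python
import math
from typing import Tuple

Frame = Tuple[float, float, float, float]


def acn_index(n: int, m: int) -> int:
    """ACN channel number for ambisonic order n and index m (-n <= m <= n)."""
    return n * (n + 1) + m


def fuma_to_ambix(w: float, x: float, y: float, z: float) -> Frame:
    """Convert one first-order FuMa frame (W, X, Y, Z) to ACN/SN3D.

    At first order only W's gain differs: FuMa stores it scaled by
    1/sqrt(2), so it is restored here. Output order is (W, Y, Z), X,
    i.e. ACN channels 0 to 3.
    """
    return w * math.sqrt(2.0), y, z, x


def rotate_yaw(w: float, x: float, y: float, z: float,
               head_yaw_deg: float) -> Frame:
    """Counter-rotate a first-order frame (W, X, Y, Z) for a head turn.

    head_yaw_deg is counterclockwise, so positive means turning left;
    a source that was straight ahead then sits to the listener's right.
    W (omni) and Z (vertical) are unaffected by a pure yaw rotation.
    """
    psi = math.radians(head_yaw_deg)
    x_rot = x * math.cos(psi) + y * math.sin(psi)
    y_rot = y * math.cos(psi) - x * math.sin(psi)
    return w, x_rot, y_rot, z
```

Note that `rotate_yaw` works on frames in W, X, Y, Z order, while `fuma_to_ambix` returns ACN order; mixing the two up is a common source of sounds appearing in the wrong place.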

You’ll notice the decode tab in VVMic. It lets us decode our B-Format file to a specific number of outputs, or channels, depending on our production needs, and here lies the flexibility that makes the soundfield microphone so interesting. With the soundfield now contained in one file, we can highlight sounds from any direction, creating "virtual microphones" from our capsule array, and output them to any number of signals.
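Decoding to a chosen number of outputs can be sketched by placing that many virtual microphones around the listener. This is the simplest possible horizontal decoder, assuming SN3D input and virtual cardioids spaced evenly with output 0 facing front; `decode_horizontal` is hypothetical, and VVMic’s own decoder options may well work differently. Production speaker and binaural decoders typically use more refined weightings.

```python
import math
from typing import List


def decode_horizontal(w: float, x: float, y: float,
                      num_outputs: int, pattern: float = 0.5) -> List[float]:
    """Decode one SN3D B-Format frame to num_outputs virtual microphones.

    Outputs are spaced evenly around the horizon, output 0 facing front
    and continuing counterclockwise. Z is ignored because every virtual
    microphone is level with the listener.
    """
    feeds = []
    for k in range(num_outputs):
        az = 2.0 * math.pi * k / num_outputs  # direction of output k
        fig8 = x * math.cos(az) + y * math.sin(az)
        feeds.append(pattern * w + (1.0 - pattern) * fig8)
    return feeds
```

With four outputs and a source straight ahead, the front output gets full level, the two side outputs get half, and the rear output gets nothing.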

So, to review: the soundfield microphone gives us a way to record ambisonic audio that reproduces sound across a full 360-degree sphere. We process the recording first into one multichannel file and then into the ambisonic format. From there, the audio can be placed into a 360 video or a VR game engine, which delivers spatialized sound over two channels to your headphones and shifts it according to your head movement. We’re just beginning our exploration of immersive audio for VR and would love to hear your thoughts on the subject. To get the conversation going, reach us @knightlab or contact me directly @jwhitesu.

Pick-up Patterns

A big part of understanding spatial sound is understanding what different microphones actually "hear." Sound engineers know the polar (pick-up) patterns for all of the microphones in their kit. The pick-up pattern describes how sensitive a microphone is to sounds hitting it at different angles from its center. A diversity of polar patterns means that recording engineers have a variety of approaches to capturing audio in different directions.

- Omnidirectional

- equal sensitivity at every angle, picking up sound from all directions. This is ideal for capturing the environmental ambience from around a dominant sound source.

- Cardioid

- best for capturing sound directly in front of where it’s pointed. Aiming it away from the sound source results in "off-axis" coloration: a dull, muted sound.

- Figure of Eight

- picks up sound from the front and rear of where it’s been placed, rejecting sound from either side. Useful for recording two sound sources at once, or as a component of stereo recording techniques.
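All three patterns above belong to one family of idealized first-order responses, sensitivity = p + (1 − p)·cos θ, where p = 1 is omnidirectional, p = 0.5 is cardioid and p = 0 is figure of eight. The sketch below evaluates that formula and converts it to decibels. It describes ideal patterns only; real microphones deviate from them, especially at high frequencies. The function names are hypothetical.

```python
import math

# Idealized first-order patterns: fraction of omnidirectional response.
PATTERNS = {"omnidirectional": 1.0, "cardioid": 0.5, "figure of eight": 0.0}


def pattern_gain(pattern: float, angle_deg: float) -> float:
    """Relative sensitivity at angle_deg off axis (negative = inverted polarity)."""
    return pattern + (1.0 - pattern) * math.cos(math.radians(angle_deg))


def gain_db(gain: float) -> float:
    """Level relative to on-axis in decibels; -inf at an exact null."""
    if gain == 0.0:
        return float("-inf")
    return 20.0 * math.log10(abs(gain))


for name, p in PATTERNS.items():
    print(name, [round(pattern_gain(p, a), 3) for a in (0, 90, 180)])
```

For a cardioid this gives about −6 dB at 90 degrees off axis and a complete null at 180 degrees, while the figure of eight is fully sensitive at 180 degrees (with inverted polarity) and nulls at the sides.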

A Spatial Sound Glossary

We know it can be challenging to pick up the vocabulary that goes with a new technology, but it pays off to know the language. Here's a crib sheet.

- A-Format

- the first set of signals produced by a soundfield microphone, one from each of the capsules in the microphone array. These four signals give us the full scope of directional information necessary to create a "soundfield".

- B-Format

- the standard audio format for ambisonics, representing the sound field on a sphere around the microphone. It is derived by processing the initial soundfield microphone recording through software that combines the A-Format signals to produce directional information. These signals are typically named W, X, Y and Z. W is a sound pressure signal that mimics an omnidirectional microphone recording in all directions, while X, Y and Z mimic figure-of-eight mics rigged along our three spatial axes (front-back, left-right, up-down).

- ACN/SN3D

- method of channel ordering and normalization, currently the most common data exchange format for ambisonic audio. Stands for "Ambisonic Channel Number" and "Schmidt semi-normalization."

- Ambisonics

- a "full-sphere" surround sound technique that covers sound sources in three dimensions. Rather than carrying signals meant for specific speakers, it carries a representation of a sound field encoded into "B-Format" audio. This audio can be decoded to derive "virtual microphones," pick-up patterns that localize sounds in any direction.

- Audio Signal

- what is passed from the microphone to a loudspeaker or recording device, carried over an audio channel. In stereo, for instance, we receive two distinct audio signals over left and right audio channels.

- Soundfield Microphone

- a microphone which typically has four closely spaced cardioid capsules arranged in a triangular pyramid or tetrahedron. Captures sound in all directions by using these four distinct signals to recreate a three-dimensional soundfield.

The featured image for this article is CC BY 3.0 and originally appeared on "Resonance Audio: Fundamental Concepts", published on the Google Developers website.

About the author