This story is part of a series on bringing the journalism we produce to as many people as possible, regardless of language, access to technology, or physical capability. Find the series introduction, as well as a list of published stories here.

In 2004, human-computer interaction professor Alan Dix published the third edition of Human-Computer Interaction along with his colleagues, Janet Finlay, Gregory Abowd, and Russell Beale. In a chapter called “The Interaction,” the authors wrote a section on natural language that ran about a page within the roughly 40-page chapter.

“Perhaps the most attractive means of communicating with computers, at least at first glance, is by natural language,” they wrote. “Users, unable to remember a command or lost in a hierarchy of menus, may long for the computer that is able to understand instructions expressed in everyday words!”

The possibilities for accessibility here are obvious. An interface that doesn’t depend on users being able to recall specific commands or methods of interaction means the interface is by its nature accessible: each person uses the system in his or her own way, so every use case is accounted for. Users no longer have to translate their intents to actions. Now the intent is the action.

The book section doesn’t end as optimistically: “Unfortunately, however, the ambiguity of natural language makes it very difficult for a machine to understand…. Given these problems, it seems unlikely that a general natural language will be available for some time.”

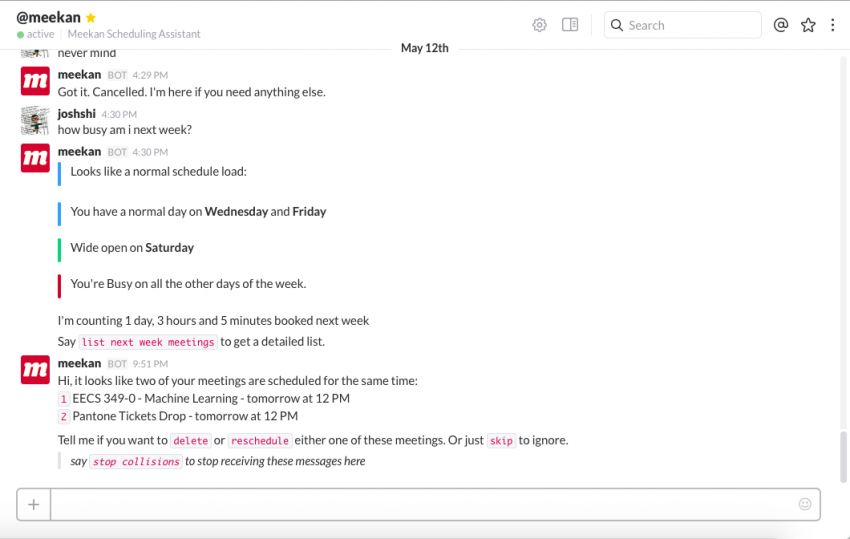

Less than 15 years later, conversational interfaces have crept into every facet of our everyday lives, with Slack bots available for anything from setting up meetings across teams to managing your to-do lists to helping you play werewolf in your channels. News has seen its fair share of conversational UI too: Quartz’s conversational news app delivers headlines to your phone that you respond to with predefined chat messages (although the snippets on the other end are written by a human being, not a robot), TechCrunch has launched a Messenger bot to personalize the tech news you get, and there’s even NewsBot, a Google Chrome extension that lets you curate the news stream you want from a variety of sources.

To be fair to Dix et al., they weren’t necessarily talking about chat bots when they said that natural language interaction was a long way off. When they stressed the challenges of building a natural language interface, they mostly meant an interface capable of understanding anything at all, rather than a restricted subset of our natural language. Chat bots depend on a defined set of commands and actions that the bot can interpret. There may be some leeway when the bot interprets imprecise or vague language like “next week” or “with George,” but ultimately the developer defines exactly what the bot can do.
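To make that restriction concrete, here is a minimal Python sketch of how such a bot might be wired: a hand-written command table, a couple of rules that turn vague phrases like “next week” and “with George” into concrete values, and a polite refusal for everything else. The command names, the patterns, and the choice to read “next week” as the following Monday are all illustrative assumptions, not how any particular bot works.

```python
import re
from datetime import date, timedelta
from typing import List, Optional

# Hypothetical command table: the bot understands only what the developer defines.
COMMANDS = {
    "schedule": re.compile(r"\b(schedule|set up|book)\b.*\bmeeting\b", re.IGNORECASE),
    "list_todos": re.compile(r"\b(show|list)\b.*\bto-?dos?\b", re.IGNORECASE),
}


def resolve_when(text: str, today: date) -> Optional[date]:
    """Give a few vague time phrases a concrete date (the bot's 'leeway')."""
    lowered = text.lower()
    if "tomorrow" in lowered:
        return today + timedelta(days=1)
    if "next week" in lowered:
        # Assumption: "next week" means the Monday of the following week.
        return today + timedelta(days=7 - today.weekday())
    return None


def resolve_who(text: str) -> List[str]:
    """Pick out attendees written as 'with <Name>' (capitalized names only)."""
    return re.findall(r"\bwith ([A-Z][a-z]+)", text)


def handle(text: str, today: date) -> str:
    """Match the message against the known commands and reply."""
    for intent, pattern in COMMANDS.items():
        if not pattern.search(text):
            continue
        if intent == "schedule":
            when = resolve_when(text, today)
            who = resolve_who(text)
            day = when.isoformat() if when else "a day to be confirmed"
            people = ", ".join(who) if who else "no one yet"
            return f"Okay, meeting on {day} with {people}."
        return "Here are your to-dos."
    # Anything outside the defined commands gets a polite refusal.
    return "Sorry, I don't know how to do that."
```

Calling `handle("Can you schedule a meeting with George next week?", date.today())` produces a confirmation, while a message like “What’s the weather?” falls through to the refusal: the bot’s vocabulary is exactly what the developer wrote down.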


Still, a future where artificial intelligence systems are able to interpret a wide range of natural commands by voice doesn’t seem too far off. Companies have already started investing heavily in natural language processing and the machine learning that supports it. Apple’s Siri, Google’s Google Now, and Amazon’s Alexa (the AI that lives in the Echo) all interpret voice commands and act as personal assistants, and their capabilities improve as more people use them. Opportunities to support blind users are being explored as we speak, with huge potential as conversational UI seeps further into our everyday lives.

Before we can discuss accessibility in conversational interfaces, however, it’s important to note where these interfaces excel, especially in comparison to graphical interfaces. Conversational interfaces are very good at doing one thing at a time when you know what you want. It would be difficult for a bot to help you compile research before writing an article, because that typically requires a lot of browsing, juggling multiple sources at once, and cross-referencing between them. But if you want to book a flight to London for next Tuesday or find out who won the primary in your state, a bot could handle that gracefully. As in a real conversation, you ask a question, the other party responds with an answer, and the two of you may deliberate or go back and forth before you settle on an answer.

Where conversational interfaces really shine, however, and the reason these interfaces have been used so much by bots and are so closely related to the field of artificial intelligence, is context. If you asked a human being for a recommendation for a place to eat next week, a number of facts would make it easier for that person to answer: where you were, what kinds of food you liked, any allergies you had, how much you’d like to spend, and so on. Likewise, you can get better, more helpful answers the more your bot knows about you and the context of your request.
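The restaurant example can be sketched the same way. This Python snippet is a hypothetical illustration, not any real bot’s logic: the `UserContext` fields (location, liked cuisines, allergies, budget), the 1-to-4 price scale, and the scoring weights are all assumptions. Allergies and budget act as hard filters, location and taste as soft preferences, and the function returns a single best match rather than a list of options.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class Restaurant:
    name: str
    neighborhood: str
    cuisine: str
    price: int  # hypothetical scale: 1 (cheap) to 4 (expensive)
    allergens: Set[str] = field(default_factory=set)


@dataclass
class UserContext:
    """What the bot is assumed to already know about the person asking."""
    neighborhood: str
    liked_cuisines: List[str]
    allergies: Set[str]
    max_price: int


def recommend(user: UserContext, options: List[Restaurant]) -> Optional[Restaurant]:
    """Return the single best match, or None if nothing fits."""
    # Hard constraints: skip places the user can't safely eat at or afford.
    candidates = [
        r for r in options
        if not (r.allergens & user.allergies) and r.price <= user.max_price
    ]
    if not candidates:
        return None

    def score(r: Restaurant) -> int:
        # Soft preferences (illustrative weights): being nearby counts more than cuisine.
        points = 0
        if r.neighborhood == user.neighborhood:
            points += 2
        if r.cuisine in user.liked_cuisines:
            points += 1
        return points

    # max() returns the first of any tied candidates, so list order breaks ties.
    return max(candidates, key=score)
```

The more fields the bot can fill in about the user, the narrower the candidate list gets, which is what lets it hand back one answer instead of a page of results.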

Matty Mariansky, co-founder and product designer at Meekan, a Slack bot that schedules meetings across teams, describes the ideal bot as being “a search engine that gives you one result.”

“Searching Google, instead of getting 20 pages of results, you would only get the one perfect result that is perfect for you and the time you are asking and the location you are in, perfect for your situation at this specific moment you are in,” Mariansky says. “It knows everything about you and it gives you the one single result you can trust. Replace that with anything. Any type of application you’re looking for, this would be the endgame, this is where it should go.”

This kind of insight is not necessarily unique to conversational interfaces. However, it is a key component in keeping up the conversational illusion that the robot you’re talking to is actually a knowledgeable human being that you can trust. And that’s where the biggest gain in accessibility comes in.

Removing a graphical user interface and replacing it with a message field, or even just a voice, places users in a much more familiar context. They may not know exactly what they can ask the bot to do (it’s up to the bot to lay that out when introducing itself), but they understand the input and can specify what they want in whatever way feels most comfortable to them. Getting feedback from the system feels more natural too, whether it comes as an error message (“Sorry, I don’t know how to do that”) or a confirmation (“Okay, Wednesday at 11am it is then”).

One of the key tenets of accessibility is creating a single experience for everyone, something that all people use in the same way regardless of their situation. Through conversational UI and natural language, accessibility will be coming to many more people very soon. It isn’t perfect: in its current state, the Amazon Echo may be hard for deaf users to use without hearing aids, depending on the severity of their disability, and conversational interfaces like the Quartz news app that still rely on graphical user interfaces as displays would be less accessible to blind users. But it does provide a better jumping-off point than a purely graphical interface. Modifying the Quartz app to communicate through audio for blind users, or adding a visual display of the Echo’s speech, would yield a more faithful recreation of the original interface than a screen reader could produce from a website, simply because of the nature of the content each one represents.

Conversations are a great medium for a more natural-feeling interface because we’re used to conversing every day. In a conversation, the two parties share a certain level of intimacy: they know what the other has been through and what they’re trying to do. And when you understand someone’s goals and motivations, you can give them the answer they need.

About the author