As data journalists, we tend to focus on making our numbers look beautiful on desktops. We pore over D3.js line charts and spend hours getting the tooltips on our maps just right. Then, right before deadline, we throw in some CSS media queries for mobile screens and call it a day. I know I’ve been a culprit more than once.

One of my favorite sessions was Aaron Williams’s MozFest session “Crafting new visualization techniques for mobile web,” where he emphasized a mobile-first, desktop-second approach.

https://twitter.com/aboutaaron/status/663006106701180929

This seems like an obvious concept, especially at a time when desktops have shrunk to the size of your palm and phone screens have grown to the size of your face. But I realized that I always treat mobile design as an obstacle to what I’ve already created, even though many readers use mobile devices as their primary way of consuming content.

So we started off the session by making a few short lists:

Some types of data visualizations:

- Maps (choropleth, heat)

- Bar graphs

- Timelines

- Small multiples

- Tables

- Line charts

Advantages of mobile phones:

- Multi-touch functionality, plus pressure-sensitive 3D Touch on the iPhone 6S

- GPS system

- Portability

- Rear- and front-facing cameras

- Vibration

- Screen rotation

Disadvantages of mobile phones:

- Imprecise touch input, since fingers and thumbs are larger than a mouse pointer

- Small screen size

- Battery life

- Bandwidth and weak internet connections

- No hover functionality

- No mouse

- Readers give less time and attention

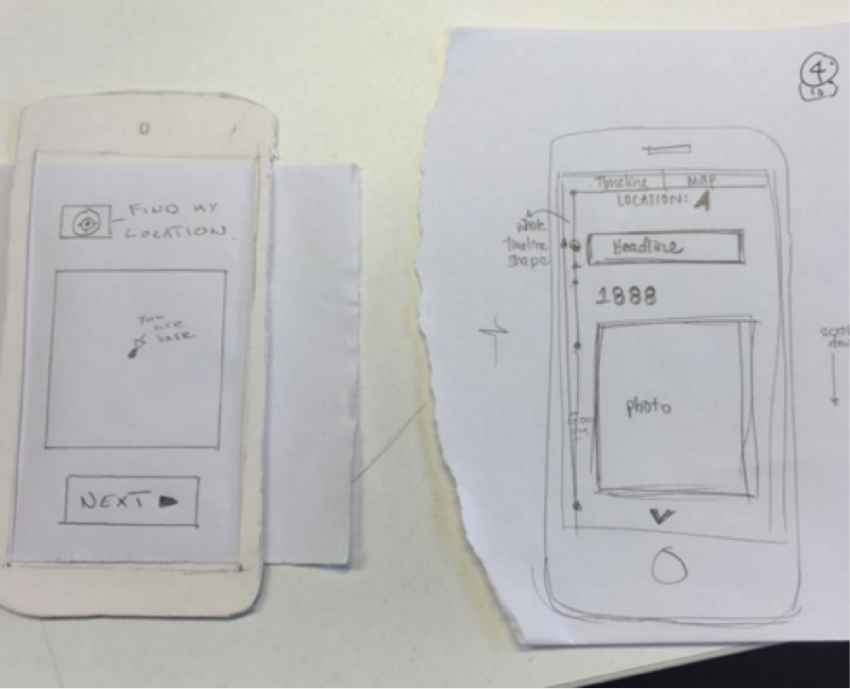

Our task was to solve one of these disadvantages or to play up one of the advantages of data viz on the mobile web. My group chose to use the phone’s GPS with a combination of maps and timelines. A few members of the group had been working on a project called Histropedia, a tool that helps users understand the historical timeline of their current location. These are the sketches we came up with.

A few other groups also tackled timelines. They proposed showing the whole timeline in truncated sections, in a vertical orientation, or even as a curving “snakes and ladders” layout. We stuck to displaying one timeline card at a time. In theory, each card would be accompanied by a small line graph of the whole timeline. That graph would show how much time passes between events, and where many events cluster within a short period.
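To make the mini line graph idea a little more concrete, here is a minimal TypeScript sketch. It assumes each event has been reduced to a hypothetical `{ title, year }` record, which is not Histropedia’s actual data model. The function places each event along a strip of a given width in proportion to its date, so long gaps and tight clusters show up directly as spacing. The events in the example are made up.

```typescript
// Hypothetical event record for illustration; not Histropedia's real data model.
interface TimelineEvent {
  title: string;
  year: number;
}

// Place each event along a strip `width` pixels wide, proportional to its year,
// so long gaps and tight clusters in time become visible as spacing.
function miniTimelinePositions(events: TimelineEvent[], width: number): number[] {
  if (events.length === 0) return [];
  const years = events.map((e) => e.year);
  const min = Math.min(...years);
  const max = Math.max(...years);
  const span = max - min;
  // If every event falls in the same year, center them all on the strip.
  if (span === 0) return years.map(() => width / 2);
  // Multiply before dividing so whole-number inputs give exact positions.
  return years.map((y) => ((y - min) * width) / span);
}

// Made-up example: a cluster around 1910 stands out against a long gap to 2000.
const sampleEvents: TimelineEvent[] = [
  { title: "Station opens", year: 1900 },
  { title: "Fire", year: 1910 },
  { title: "Rebuilt", year: 1911 },
  { title: "Listed as landmark", year: 2000 },
];
console.log(miniTimelinePositions(sampleEvents, 100));
```

On a 100-pixel strip, these events land at 0, 10, 11 and 100. The two events from 1910 and 1911 sit almost on top of each other, and the empty stretch after them shows the long gap to 2000. The card currently on screen could then be highlighted at its own position on the strip.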

Aside from the fun paper phones and great brainstorming, the session changed how I think about data viz. It made me wonder how my previous projects might have turned out with a mobile-first view. Without some features exclusive to desktops, we have the opportunity to simplify and untangle our information graphics, which focuses both our readers and our storytelling. And I think that by designing mobile-first, we can even explore future forms of data viz that are ideally suited to a mobile interface. They could be more sensitive to a user’s location, take advantage of multi-touch, or use vibration to serve visually impaired audiences.

About the author