We’re pleased to announce JuxtaposeJS, a new Knight Lab tool that helps journalists tell stories by comparing two frames, including photos and GIFs.

JuxtaposeJS is an adaptable storytelling tool and is ideal for highlighting then/now stories that explain slow changes over time (growth of a city skyline, regrowth of a forest, etc.) or before/after stories that show the impact of single dramatic events (natural disasters, protests, wars, etc.).

For example, check out this NASA image of the sun in its normal state and during a solar flare:

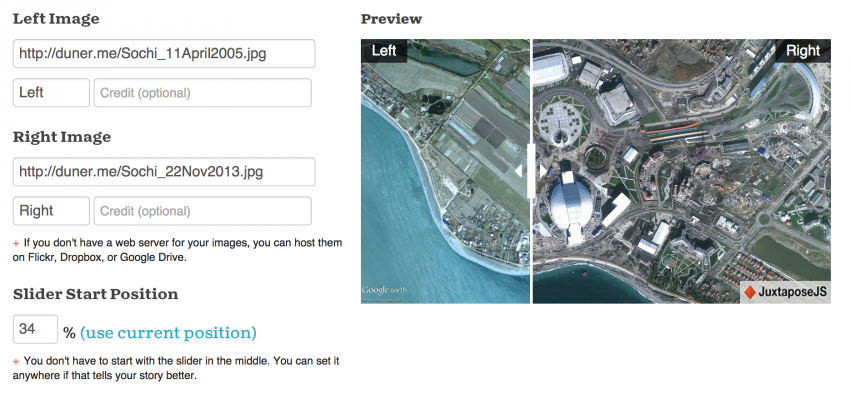

JuxtaposeJS is free, easy to use, and open source. Almost anyone can use it, as long as you have links to two similar pieces of media (hosted on your own server or on Flickr). Once you've got the links, all you need to do is copy and paste the URLs into the appropriate fields at Juxtapose.knightlab.com, modify the labels as you see fit, add photo credits, and then copy and paste the resulting embed code into your site.

Before we built Juxtapose, I looked at dozens of examples of before/after sliders and tried to take the best of each. With JuxtaposeJS you can modify the handle's start position to highlight the area of change, and you can choose to click the slider instead of dragging it. It works on phones and tablets with the touch or swipe of a finger.

You've probably seen similar tools elsewhere. They work well, but we built JuxtaposeJS without relying on jQuery, which makes the tool more lightweight, flexible, and adaptable. It’s also accessible to any newsroom or journalist, regardless of technical skills. Simply fill in the forms at Juxtapose.knightlab.com to build your slider. It's that easy.

JuxtaposeJS joins four other tools in Publishers' Toolbox, which we hope makes the suite of tools even more useful for journalists looking for quick-to-deploy, easy-to-use storytelling tools.

We hope you’ll find JuxtaposeJS useful and use it to make your stories more interesting and informative. If you use it, share your work with us at knightlab@northwestern.edu or @KnightLab.
